Webinar Recap – Three Ways to Slash Your Enterprise Cloud Storage Cost

The above is a recording, and what follows is a full transcript from the webinar, “Three Ways to Slash Your Enterprise Cloud Storage Cost.” You can download the full slide deck on Slideshare.

My name is Jeff Johnson. I’m the head of Product Marketing here at Buurst.

In this webinar, we will talk about three ways to slash your Enterprise cloud storage cost.

Companies trust Buurst for data performance, data migration, data availability, data control and security, and what we are here to talk about today is data cost control. We think about the storage vendors out there. The storage vendors want to sell more storage.

At Buurst, we are a data performance company. We take that storage, and we optimize it; we make it perform. We are not driven or motivated to sell more storage. We just want that storage to run faster.

We are going to take a look at how to avoid the pitfalls and the traps the storage vendors use to drive revenue, how to prevent being charged or overcharged for storage you don’t need, and how to reduce your data footprint.

Data is increasing every year. 90% of the world’s data has been created over the last two years. Every two years, that data is doubling. Today, IT budgets are shifting. Data centers are closing – they are trying to leverage cloud economics – and IT is going to need to save money at every single level of that IT organization by focusing on this data, especially data in the cloud, and saving money.

We say now is your time to be an IT hero.

There are three things that we’re going to talk about in today’s webinar.

We are going to be looking at all the tools and capabilities that you have for on-premises solutions and moving those into the cloud and trying to figure out which of those solutions are already in the cloud or not.

We’ll be taking a look at reducing the total cost of acquisition. That’s just pure Excel spreadsheet cloud storage numbers: which cloud storage to use that doesn’t tax you on performance. Speaking of performance, we’ll also look at reducing the cost of performance, because some people want to maintain performance but have less expense.

I bet you we could even figure out how to have better performance with less costs. Let’s get right down into it.

Reducing that cost by optimizing Enterprise data

We think about all of these tools and capabilities that we’ve had on our NAS, on our on-premises storage solutions over the years. We expect those same tools, capabilities, and features to be in that cloud storage management solution, but they are not always there in cloud-native storage solutions. How do you get that?

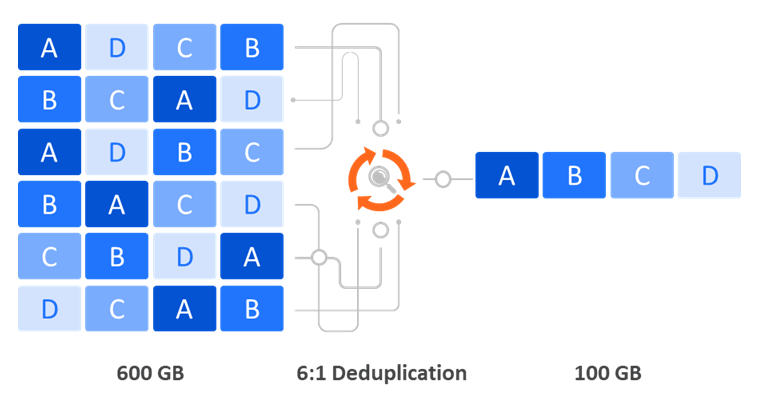

Well, that’s probably pretty easy to figure out. The first one we’re going to talk about is deduplication. This is inline deduplication. The files are compared block by block to see which blocks we can eliminate and just leave a pointer there. To the end-user, it looks like they have the file, but it’s just a pointer to a duplicate.

In most cases, we can reduce that data storage by 20 to 30%, and this becomes exponentially critical in our cloud storage.
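To make the block-and-pointer idea concrete, here is a minimal sketch of block-level deduplication. It is a generic illustration, not how SoftNAS implements it: the 4 KiB block size, the use of SHA-256, and the function names `dedupe_blocks`, `rebuild`, and `savings` are all assumptions made for the example.

```python
import hashlib

BLOCK_SIZE = 4096  # assumed block size in bytes, chosen for illustration


def dedupe_blocks(data: bytes, block_size: int = BLOCK_SIZE):
    """Split data into fixed-size blocks and store each unique block once.

    Returns (store, pointers): store maps a block hash to the block bytes,
    and pointers lists the hash of every block in its original order.
    """
    store = {}
    pointers = []
    for offset in range(0, len(data), block_size):
        block = data[offset:offset + block_size]
        digest = hashlib.sha256(block).hexdigest()
        # Keep the block only the first time it is seen; later copies
        # are represented by the pointer alone.
        store.setdefault(digest, block)
        pointers.append(digest)
    return store, pointers


def rebuild(store, pointers) -> bytes:
    """Reassemble the original data by following the pointers."""
    return b"".join(store[digest] for digest in pointers)


def savings(store, pointers) -> float:
    """Fraction of blocks eliminated by deduplication."""
    if not pointers:
        return 0.0
    return 1 - len(store) / len(pointers)


if __name__ == "__main__":
    data = b"A" * BLOCK_SIZE * 3 + b"B" * BLOCK_SIZE
    store, pointers = dedupe_blocks(data)
    print(len(pointers), "blocks,", len(store), "stored,", savings(store, pointers))
```

The reader of the data still gets the full content back from `rebuild`, which is the point being made above: the duplicate blocks exist only as pointers.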

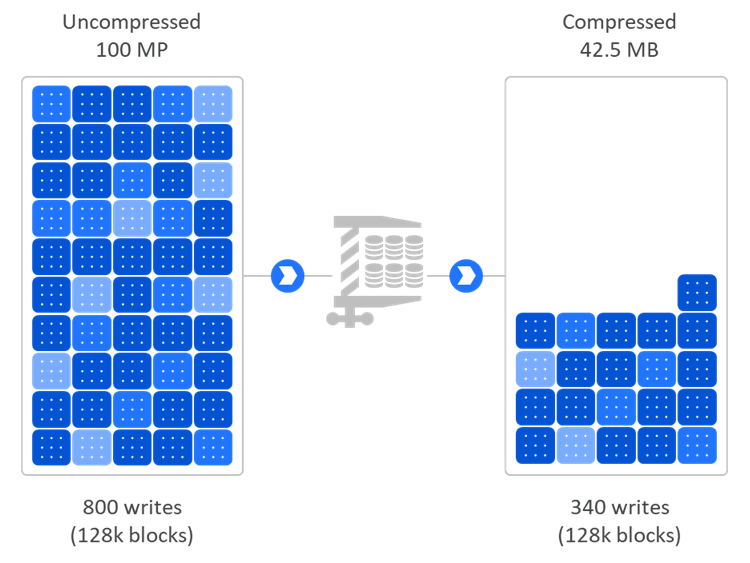

The next one we have is compression. Well, with compression, we are going to reduce the number of bits needed to represent that data. Typically, we can reduce the storage cost by 50 to 75%, depending on the types of files that are out there that can be compressed, and this is turned on by default with our SoftNAS program.
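If you want a rough feel for how compressible your own files are, a small sketch like the one below can help. It uses Python’s standard `zlib` module as a stand-in; the compression algorithm SoftNAS uses is not stated in this talk, and the function name `compression_savings` is made up for the example.

```python
import zlib


def compression_savings(data: bytes, level: int = 6) -> float:
    """Return the fraction of bytes saved by zlib compression.

    The result can be slightly negative for data that does not compress,
    such as files that are already compressed or encrypted.
    """
    if not data:
        return 0.0
    compressed = zlib.compress(data, level)
    return 1 - len(compressed) / len(data)


if __name__ == "__main__":
    # Repetitive, log-like text compresses very well.
    sample = b"timestamp=2020-01-01 level=INFO msg=ok\n" * 1000
    print(f"saved {compression_savings(sample):.0%}")
```

Results vary a lot by file type, which is why the savings quoted above are given as a range.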

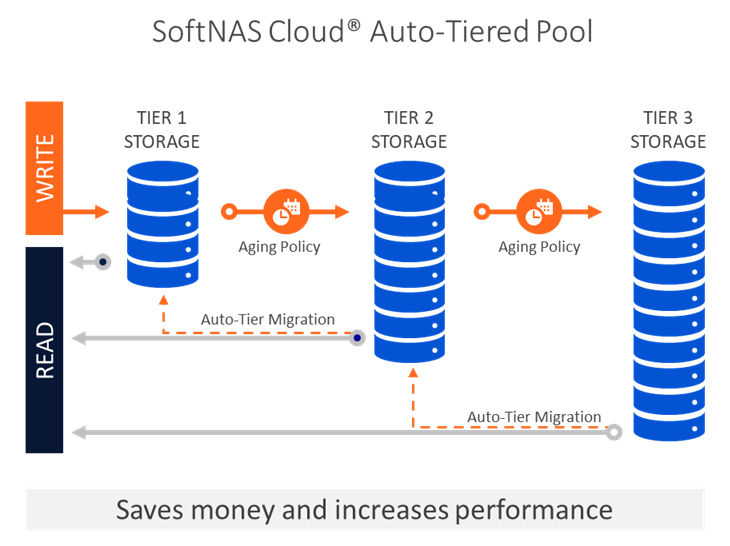

The last one we want to talk about is Data Tiering. 80% of data is rarely used past 90 days, but we still need it. With SoftNAS, we have data tiering policies or aging policies that can move data from more expensive, faster storage to less expensive storage, and even all the way back to ice-cold storage.

We could gain some efficiency in this tiering, and for a lot of customers, we’ve reduced their Enterprise cloud storage cost with an active data set by 67%.
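An aging policy can be pictured as a simple rule on how long ago data was last touched. The sketch below is only an illustration of that idea: the tier names, the 30-day threshold, and the function `choose_tier` are hypothetical, and only the 90-day mark comes from the talk.

```python
from datetime import datetime, timedelta

# Hypothetical age thresholds and tier names, for illustration only.
TIER_RULES = [
    (timedelta(days=30), "hot"),   # e.g. SSD block storage
    (timedelta(days=90), "warm"),  # e.g. HDD block storage
]
COLDEST_TIER = "cold"              # e.g. object or archive storage


def choose_tier(last_access: datetime, now: datetime) -> str:
    """Pick a tier from the time elapsed since the data was last accessed."""
    age = now - last_access
    for max_age, tier in TIER_RULES:
        if age <= max_age:
            return tier
    return COLDEST_TIER


if __name__ == "__main__":
    now = datetime(2020, 6, 1)
    for days in (10, 60, 200):
        print(days, "days old ->", choose_tier(now - timedelta(days=days), now))
```

A real policy would also have to decide when to promote data back to a faster tier once it is read again; that part is left out here.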

What’s crazy is when we add all these together. If I take a look at 50 TB of storage at 10 cents per GiB, that is $5,000 a month. If I dedupe that just 20%, it brings it down to $4,000 a month. Then if I compress that by 50%, I can get it down to $2,000 a month. Then if I tier that with 20% SSD and 80% HDD, I can get down to $1,000 a month, reducing my overall cost by 80%, from $5,000 to $1,000 a month.
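The arithmetic in that example can be sketched in a few lines. Two things here are assumptions rather than figures from the talk: 1 TB is treated as 1,000 GB to match the round numbers, and the HDD price of 3.75 cents is back-solved so that the tiering step lands on the quoted $1,000. The function names are made up for the example.

```python
GB_PER_TB = 1000  # decimal units, matching the round numbers in the talk


def monthly_cost(capacity_tb: float, price_per_gb: float) -> float:
    """Monthly cost in dollars for a capacity at a flat per-GB price."""
    return capacity_tb * GB_PER_TB * price_per_gb


def optimized_cost(capacity_tb: float, dedupe_saving: float,
                   compression_saving: float, ssd_fraction: float,
                   ssd_price: float, hdd_price: float) -> float:
    """Apply dedupe, then compression, then a blended SSD/HDD price."""
    stored_tb = capacity_tb * (1 - dedupe_saving) * (1 - compression_saving)
    blended_price = ssd_fraction * ssd_price + (1 - ssd_fraction) * hdd_price
    return monthly_cost(stored_tb, blended_price)


if __name__ == "__main__":
    SSD_PRICE = 0.10    # 10 cents per GB, from the talk
    HDD_PRICE = 0.0375  # assumption: back-solved to match the $1,000 figure

    print(round(monthly_cost(50, SSD_PRICE)))               # raw
    print(round(monthly_cost(50 * 0.8, SSD_PRICE)))         # after 20% dedupe
    print(round(monthly_cost(50 * 0.8 * 0.5, SSD_PRICE)))   # after 50% compression
    print(round(optimized_cost(50, 0.20, 0.50, 0.20, SSD_PRICE, HDD_PRICE)))
```

Note that the savings multiply rather than add: each step applies to whatever the previous step left behind.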

Again, not everything is equal out in the cloud. With SoftNAS, obviously, we have dedup, compression, and tiering. With AWS EFS, they do have tiering – great product. With AWS FSx, they have deduplication but not compression and tiering. Azure Files doesn’t have that.

Actually, with AWS infrequent storage, they charge you to write and read from that cold storage. They charge a penalty to use the data that’s already in there. Well, that’s great.

Reducing the total cost of acquisition: just use the cheapest storage.

Now I see a toolset here that I’ve used on-premises. I’ve always used dedupe on-premises. I’ve always used compression on-premises. I might have used tiering on-premises, but it’s really like NVMe type of disk, and that’s great.

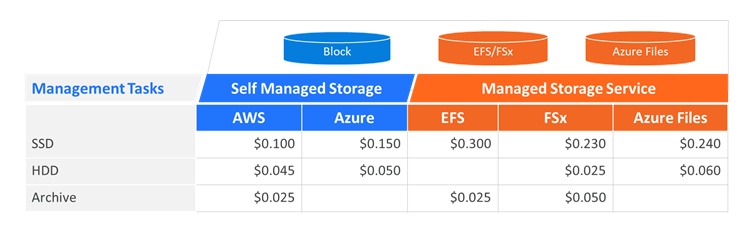

I see the value in that, but TCA is a whole different ballpark here. It’s self-managed versus managed. It’s different types of disks to choose from. We take a look at this. This is like I said earlier. It’s just Excel spreadsheet stuff: what do they charge, what do I charge, and who has the least cost.

We take a look at this in two different kinds of buckets. We have self-managed storage like NVMe disks and block storage. We have managed storage as a service like EFS, FSx, and Azure Files.

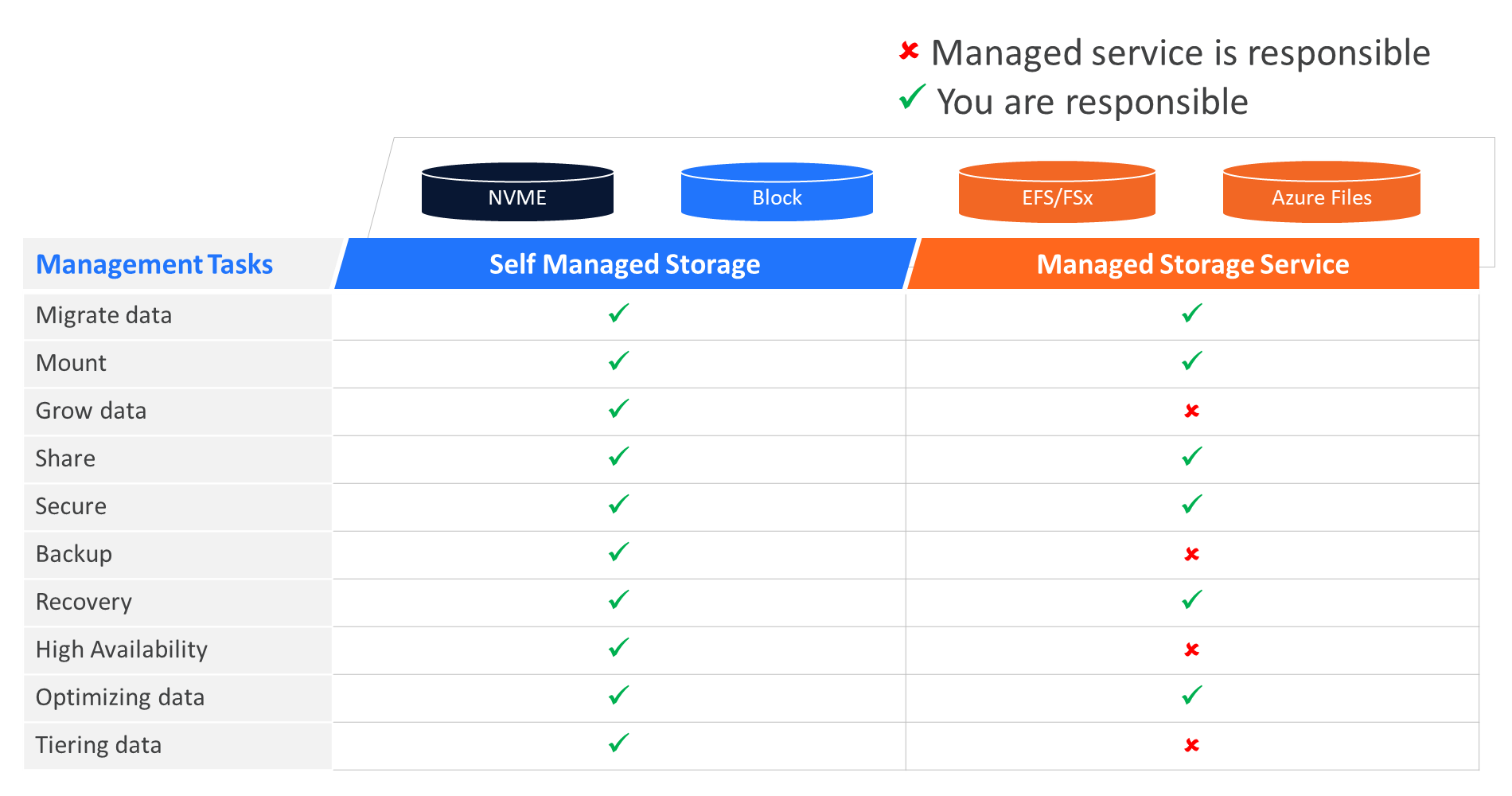

If we drill down into that a little bit, there are still things that you need to do and there are things that your managed storage service will do for you. For instance, of course, if it’s self-managed, you need to migrate the data, mount the data, grow the data, share the data, secure the data, back up the data. You have to do all those things.

Well, what are you paying for? Because if I have a managed storage service, I still have to migrate the data. I have to mount the data. I have to share and secure the data. I have to recover the data, and I have to optimize that data. What am I really getting for that price?

For block storage, AWS is 10 cents per gig per month. In Azure, it’s 15 cents per gig per month. For those things that I’m trying to alleviate, like securing, migrating, mounting, sharing, and recovery, I am still going to pay 30 cents for EFS, three times the price of AWS SSD; or FSx, 23 cents; or Azure Files, 24 cents. I’m paying a premium for the storage, but I am still having to do a lot on the management layer of that.

If we dive a little bit deeper into all that, EFS is really designed for NFS connectivity, so my Linux clients. AWS FSx is designed with CIFS for the Windows clients with SMB, and Azure Files with CIFS for SMB. That’s interesting.

If I’m on Amazon and I have Windows and Linux clients, I have to have an EFS account and an FSx account. That’s fine. But wait a second. This is a shared access model. I’m in contention with all the other companies who have signed up for EFS.

Yeah, they are going to secure my data, so company one can’t access company two’s data, but we’re all in line for the contention of that storage. So what do they do to protect me and to give me performance? Yeah, it’s shared access.

They’ll throttle all of us, but then they’ll give us bursting credits and bursting policies. They’ll charge me for extra bursting, or I can just pay for increased performance, or I can just buy more storage and get more performance.

At best, I’ll have an inconsistent experience. Sometimes I’ll have what I expect. Other times, I won’t have what I expect – in a negative way. For sure, I’ll have all of the scalability, all the stability and security with these big players. They run a great ship. They know how to run a data center better than all on-premises data centers combined.

But we compare that to self-managed storage. Self-managed, you have a VM out there, whether it’s Linux or Windows, and you attach that storage. This is how we attached storage back in the ‘80s or ‘90s, with a client-server with all its attached storage. That wasn’t a very great way to manage that environment.

Yeah, I had dedicated access, consistent performance, but it wasn’t very scalable. If I wanted to add more storage, I had to get a screwdriver, pop the lid, add more disks, and that is not the way I want to run a data center. What do we do?

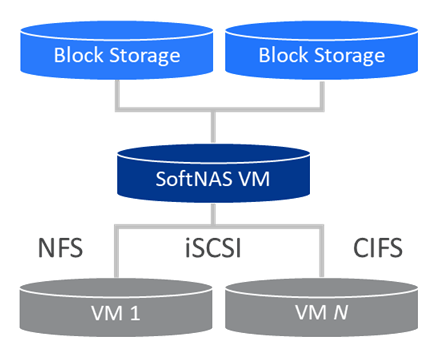

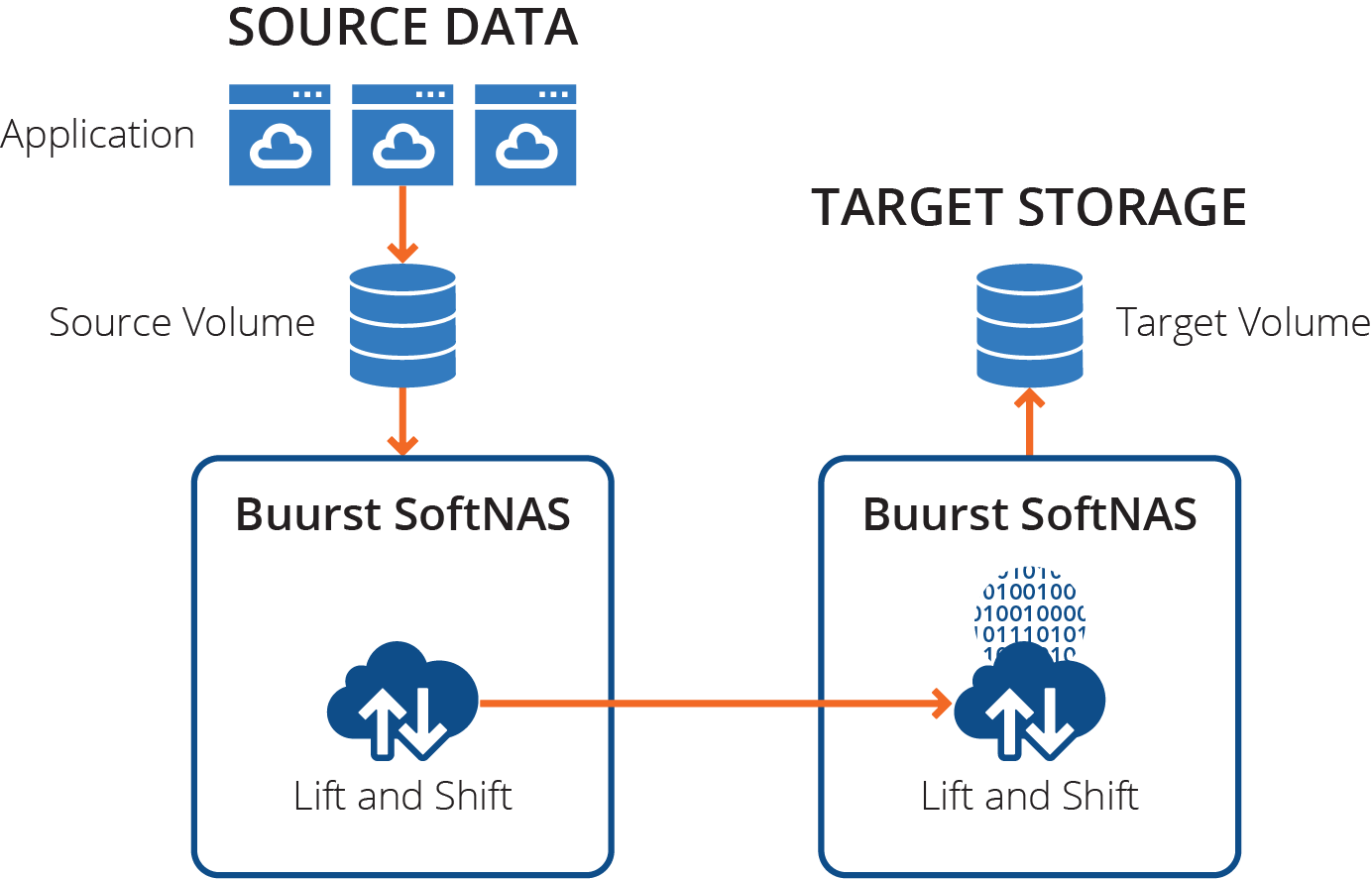

We put a NAS in between all of my storage and my clients. We’re doing the same thing with SoftNAS in the cloud. With SoftNAS, we have an NFS protocol, CIFS protocol, or we use iSCSI to connect just the VMs of my company to the NAS and have the NAS manage the storage out to the VMs. This gives me dedicated access to storage and consistent, predictable performance.

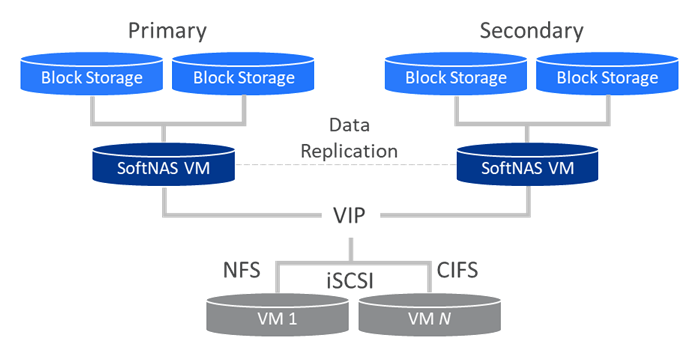

The performance is dictated by the NAS. The bigger the NAS, the faster the NAS. The more RAM and the more CPU the NAS has, the faster it will deliver that data down to the VMs. I will get that Linux and Windows environment with scalability, stability, and security. Then I can also make that highly available.

I can have duplicate environments that give me data performance, data migration, data cost control, data availability, data control, and security through this complete solution. But you’re looking at this and going, “Yeah, that’s double the storage, that’s double the NAS.” How does that work when you’re talking about Excel spreadsheets kind of data?

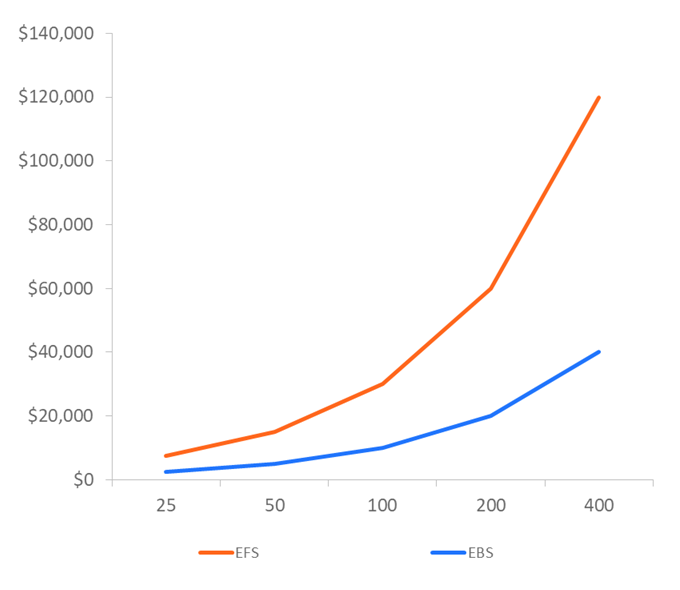

Alright. We know that EBS storage is 10 cents per GiB per month. EFS storage is 30 cents per GiB per month. The chart is going to expand the more terabytes I have in my solution.

If I add a redundant set of block storage, a redundant set of VMs, and then I turn on dedupe and compression, and then I turn on my tiering, the price of the SoftNAS solution is so much smaller than what you pay for storage. It doesn’t affect the storage cost that much. This is how we’re able to save companies huge amounts of money per month on their storage bill.
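Here is a rough way to check the “that’s double the storage” objection with spreadsheet-style numbers. The 10-cent and 30-cent prices come from the talk, and the 60% data reduction is what 20% dedupe followed by 50% compression works out to. The talk gives no price for the NAS licences and VMs, so the sketch does not guess one; it only computes how much that layer could cost before the managed service breaks even. The function names are hypothetical, and tiering is left out.

```python
def efs_monthly(capacity_gb: float, efs_price: float = 0.30) -> float:
    """Monthly cost of storing the full data set on EFS."""
    return capacity_gb * efs_price


def nas_ha_storage_monthly(capacity_gb: float, ebs_price: float = 0.10,
                           data_reduction: float = 0.60,
                           copies: int = 2) -> float:
    """Monthly block-storage cost behind a mirrored (HA) NAS.

    data_reduction is the combined dedupe and compression saving.
    NAS licence and VM costs are not included here.
    """
    stored_gb = capacity_gb * (1 - data_reduction)
    return copies * stored_gb * ebs_price


def break_even_nas_cost(capacity_gb: float) -> float:
    """Monthly NAS licence and VM spend at which both options cost the same."""
    return efs_monthly(capacity_gb) - nas_ha_storage_monthly(capacity_gb)


if __name__ == "__main__":
    capacity_gb = 50_000  # 50 TB in decimal units, as in the earlier example
    print(round(efs_monthly(capacity_gb)))
    print(round(nas_ha_storage_monthly(capacity_gb)))
    print(round(break_even_nas_cost(capacity_gb)))
```

Under these assumptions, two full copies of the reduced data on block storage still cost well under the single managed copy, and the gap grows with capacity. Plug in your own quotes for the NAS layer before drawing conclusions.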

This could be the single most important thing you do this year because most of the price of a cloud environment is the price of the storage, not the compute, not the RAM, not the throughput. It’s the storage.

If I can reduce and actively manage, compress, optimize that data and tier it, and use cheaper storage, then I’ve done the appropriate work that my company will benefit from. On the one hand, it is all about reducing costs, but there is a cost to performance also.

Reducing the Cost of Performance

No one’s ever come to me and said, “Jeff, will you reduce my performance?” Of course not. Nobody wants that. Some people want to maintain performance and lower costs. We can actually increase performance and lower costs. Let me show you how that works.

We’ve been looking at this model throughout this talk. We have EBS storage at 10 cents with a NAS, a SoftNAS between the storage and the VMs. Then we have this managed storage like EFS with all of the other companies in contention with that storage.

It’s like me working from home, on the left-hand side, and having a consistent experience to my hard drive from my computer. I know how long it takes to boot. I know how long it takes to launch an application. I know how long it takes to do things.

But if my computer is at work in the office and I have to hop on a freeway, I’m in contention with everybody else who’s going to work who also needs to work on their hard drive at the computer in their office. Some days the traffic is light and fast, some days it’s slow, some days there’s a wreck, and it takes twice as long to get there. It’s inconsistent. I’m not sure what I am paying for.

If we think about what EFS does for performance, and this is based on their website, the more storage you have, the more performance or throughput you get. I’ve seen some ads and blog articles from a lot of developers.

They say, “If I need 100 MB of throughput for my solution and I only need one terabyte worth of data, I’ll put an extra terabyte of dummy data out there on my share so that I can get the performance I want.” I put another terabyte at 30 cents per GiB per month that I’m not even going to use just to get the performance that I need.
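The cost of that workaround is easy to put a number on. In the sketch below, the baseline of 50 MB/s per terabyte is simply what the example implies (two terabytes to get 100 MB/s); check the provider’s current documentation for the real figure. The 30-cent price is the one quoted in this talk, and the function name `padding_cost` is made up for the example.

```python
BASELINE_MBPS_PER_TB = 50  # assumption implied by the example: 2 TB for 100 MB/s
PRICE_PER_GB = 0.30        # per GB per month, as quoted in the talk
GB_PER_TB = 1000           # decimal units, for round numbers


def padding_cost(needed_tb: float, target_mbps: float):
    """Return (padding_tb, extra_monthly_cost) when throughput scales with capacity."""
    required_tb = target_mbps / BASELINE_MBPS_PER_TB
    padding_tb = max(0.0, required_tb - needed_tb)
    return padding_tb, padding_tb * GB_PER_TB * PRICE_PER_GB


if __name__ == "__main__":
    padding_tb, extra = padding_cost(needed_tb=1, target_mbps=100)
    print(f"{padding_tb:.1f} TB of dummy data, about ${extra:.0f} extra per month")
```

For the one-terabyte, 100 MB/s case, that is a full extra terabyte paid for every month and never used.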

Then there’s bursting, then there is throttling, and then it gets confusing. We are so focused on delivering performance. SoftNAS is a data-performance company. We have levels or scales of performance, 200, 400, 800, to 6,400. Those relate to throughput, so the throughput and IOPS that you can expect for the solution.

We are using storage that’s only 10 cents per GiB on AWS. It’s dedicated performance: you can determine the performance you need and then buy that solution. On Azure, it’s a little bit different. Their denominator for performance is vCPUs. A 200 is a 2 vCPU. A 1,600 is a 20 vCPU. Then we publish the IOPS and throughput that you can expect to have for your solution.

Of course, to reduce the cost of performance, use a NAS to deliver the storage in the cloud. Use predictable performance. Use attached storage with a NAS. Use a RAID configuration. You can focus on read and write cache, even with the different disks that you use, or, with a NAS, on the amount of RAM that you use.

Pay for performance. Don’t pay more for the capacity to get the performance. We just took a real quick look at three ways to slash your storage cost – optimizing that storage with dedupe, compression, and tiering, making less expensive storage work for you, right, and then reducing the cost of performance. Pay for the performance you need, not for more storage to get the performance you need.

What do you do now? You could start a free trial on AWS or Azure. You can schedule a performance assessment where you talk with one of our dedicated people who do this 24/7 to look at how to get you the most performance you can at the lowest price.

We want to do what’s right by you. At Buurst, we are a data-performance company. We don’t charge for storage. We don’t charge for more storage. We don’t charge for less storage. We want to deliver the storage you paid for.

You pay for the storage from Azure or AWS. We don’t care if you attach a terabyte or a petabyte, but we want to give you the performance and availability that you expect from an on-premises solution. Thank you for today. Thank you for your time.

At Buurst, we’re a data-performance company. It’s your time to be this IT hero and save your company money. Reach out to us. Get a performance assessment. Thank you very much.